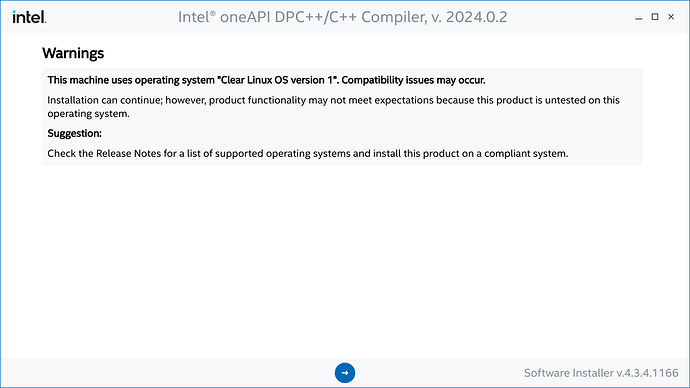

The message states that oneAPI DPC++/C++ Compiler is untested on Clear Linux OS. Both products are made by Intel.

I tested Intel’s C (icx) and C++ (icpx) compilers, including SYCL targeting the NVIDIA GPU.

Although the oneAPI CUDA plugin prefers CUDA 12.x, I installed CUDA 11.8 because that version works with Numba and Codon.

$ ONEAPI_DEVICE_SELECTOR="cuda:*" SYCL_PI_TRACE=1 ./simple-sycl-app

SYCL_PI_TRACE[basic]: Plugin found and successfully loaded: libpi_cuda.so [ PluginVersion: 12.27.1 ]

SYCL_PI_TRACE[all]: Requested device_type: info::device_type::automatic

SYCL_PI_TRACE[all]: Selected device: -> final score = 1500

SYCL_PI_TRACE[all]: platform: NVIDIA CUDA BACKEND

SYCL_PI_TRACE[all]: device: NVIDIA GeForce RTX 3070

The results are correct!
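To confirm which backend was picked without reading the whole trace, the output can be filtered. This is a minimal sketch, assuming the `platform:` and `device:` line format shown in the trace above; the function name `sycl_selected_device` is made up for illustration.

```shell
# Print the platform and device lines from SYCL_PI_TRACE output read on stdin.
# Assumes the "SYCL_PI_TRACE[all]: platform: ..." line format shown above.
sycl_selected_device() {
    sed -n -E 's/^SYCL_PI_TRACE\[all\]:[[:space:]]*(platform|device):[[:space:]]*(.*)$/\1=\2/p'
}

# Example (stderr is merged in case the trace is written there):
# ONEAPI_DEVICE_SELECTOR="cuda:*" SYCL_PI_TRACE=1 ./simple-sycl-app 2>&1 | sycl_selected_device
```

Lines such as "Requested device_type" and "Selected device" are skipped on purpose, since only the final platform and device names are of interest here.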

No changes are needed to the CUDA installation described here.

Any performance gains with icx/icpx?

I came across this post because I want to get into AI work using an NVIDIA GPU. I will only be SSHing into this box, which I would like to set up with Clear Linux because I believe it is the fastest, with no GUI installed at all.

Do I still need to install the NVIDIA driver if I just want to use the GPU for AI work, i.e. bytes in and bytes out, with no UI use case per se?

I think I do, but I thought I’d ask.

Yes. The answer is found here.

- “To build an application, a developer has to install only the CUDA Toolkit and necessary libraries required for linking.”

- “In order to run a CUDA application, the system should have a CUDA enabled GPU and an NVIDIA display driver that is compatible with the CUDA Toolkit that was used to build the application itself.”
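On a headless box reached over SSH, a quick way to see whether the driver is in place is to look for `nvidia-smi`, which is installed together with the driver. The sketch below is only a convenience check; the function name `nvidia_driver_status` is hypothetical.

```shell
# Report whether the NVIDIA driver's userspace tools are usable on this host.
# nvidia-smi ships with the driver, so its absence suggests no driver is installed.
nvidia_driver_status() {
    if command -v nvidia-smi >/dev/null 2>&1; then
        # Query GPU name and driver version; this fails if the driver is not loaded.
        if nvidia-smi --query-gpu=name,driver_version --format=csv,noheader 2>/dev/null; then
            return 0
        fi
        echo "nvidia-smi found, but it could not talk to the driver"
        return 1
    fi
    echo "nvidia-smi not found: the NVIDIA driver does not appear to be installed"
    return 1
}
```

Calling `nvidia_driver_status` prints either the GPU name and driver version or a one-line explanation, and needs no display or GUI.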

Intel® oneAPI DPC++/C++ Compiler, v. 2024.0.2 (download the Stand-Alone version).

Prerequisite: Install the c-basic and devpkg-boost bundles.

sudo swupd bundle-add c-basic devpkg-boost

Install oneAPI DPC++/C++ Compiler.

bash l_dpcpp-cpp-compiler_p_2024.0.2.29_offline.sh

Interestingly, the installer fails to detect g++, even though it is installed by the c-basic bundle, is on the PATH, and exists in /usr/bin.

Out of the box, OpenMP (the -fopenmp C/C++ option) is broken on Clear Linux: the compiler cannot find the omp.h header. Perform the following steps to fix it. Note: Adjust the path accordingly for future versions.

cd ~/intel/oneapi/compiler/2024.0/lib/clang/17/include

ln -s ../../../../opt/compiler/include/omp.h .

ln -s ../../../../opt/compiler/include/omp_lib.h .

ln -s ../../../../opt/compiler/include/omp-tools.h .
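Since the path changes between releases, the same three links can be created by a small function that takes the compiler root and the clang version as arguments. This is a sketch under the assumption that future releases keep the same directory layout; `link_omp_headers` and its parameters are names invented here.

```shell
# Link the OpenMP headers into the compiler's clang include directory.
# Arguments (both are assumptions to adjust per release):
#   $1  compiler root, e.g. ~/intel/oneapi/compiler/2024.0
#   $2  clang version used in the lib/clang/<N> path, e.g. 17
link_omp_headers() {
    root=$1
    clang_ver=$2
    dest="$root/lib/clang/$clang_ver/include"
    [ -d "$dest" ] || { echo "missing directory: $dest" >&2; return 1; }
    for header in omp.h omp_lib.h omp-tools.h; do
        # Same relative target as the manual ln -s commands; -f makes reruns safe.
        ln -sf "../../../../opt/compiler/include/$header" "$dest/$header" || return 1
    done
}
```

For the version used in this post, the call would be `link_omp_headers ~/intel/oneapi/compiler/2024.0 17`. Unlike plain `ln -s`, the `-f` flag replaces links that already exist.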

Clear Linux no longer provides libiomp5.so as of CL 39970. Copy the OpenMP runtime library to /usr/local/lib64/.

cd ~/intel/oneapi/compiler/2024.0/lib

sudo mkdir -p /usr/local/lib64

sudo cp -a libiomp5.so /usr/local/lib64/.

sudo chmod 755 /usr/local/lib64/libiomp5.so

cd ~

Update the dynamic linker configuration to search the /usr/local paths. Skip this step if you have already done so.

sudo mkdir -p /etc/ld.so.conf.d

sudo tee "/etc/ld.so.conf.d/local.conf" >/dev/null <<'EOF'

/usr/local/lib64

/usr/local/lib

EOF

if ! grep -q '^include /etc/ld\.so\.conf\.d/\*\.conf$' /etc/ld.so.conf 2>/dev/null

then

sudo tee --append "/etc/ld.so.conf" >/dev/null <<'EOF'

include /etc/ld.so.conf.d/*.conf

EOF

fi

Refresh the dynamic linker run-time cache.

sudo ldconfig
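To verify that the refresh picked up the copied runtime, the linker cache can be searched for the library name. A minimal sketch; the helper name `lib_in_ld_cache` is made up for this example.

```shell
# Check whether a shared library name appears in the dynamic linker cache.
# $1 is the library file name, e.g. libiomp5.so
lib_in_ld_cache() {
    # ldconfig -p prints the current cache contents without modifying anything.
    ldconfig -p 2>/dev/null | grep -F -q -- "$1"
}

if lib_in_ld_cache libiomp5.so; then
    echo "libiomp5.so is visible to the dynamic linker"
else
    echo "libiomp5.so not found in the linker cache"
fi
```

If the library is reported as missing, the likely causes are a skipped `sudo ldconfig` or a missing `include` line in /etc/ld.so.conf.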

On non-Intel systems, the C/C++ -axCODE option does not work. Instead, use the -xHost option for best performance. “That tells the compiler to generate instructions for the highest instruction set available on the compilation host processor.”

. ~/intel/oneapi/setvars.sh --include-intel-llvm

clang++ -O3 -xHost ...

clang++ -O3 -xhaswell ...

clang++ -O3 -xcore-avx2 ...

clang++ -O3 -xskylake-avx512 ...

clang++ -O3 -xx86-64-v2 (or v3, v4) ...

clang++ -O3 -xcommon-avx512 ...
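When choosing between the x86-64-v2, v3 and v4 targets for a build that must run on another machine, it helps to know which level a given CPU reaches. The sketch below derives a level from a /proc/cpuinfo-style flag list. It checks only a representative subset of each level's required features, and the function names are invented for this example, so treat the result as a hint rather than a guarantee.

```shell
# Return success if every word in $2.. appears in the space-separated list $1.
has_all_flags() {
    flags=" $1 "
    shift
    for want in "$@"; do
        case "$flags" in
            *" $want "*) ;;
            *) return 1 ;;
        esac
    done
    return 0
}

# Print the highest x86-64 level (v1..v4) implied by a CPU flag list.
# Flag names follow /proc/cpuinfo (abm is the Linux name for LZCNT).
x86_64_level() {
    level=v1
    if has_all_flags "$1" cx16 lahf_lm popcnt sse4_1 sse4_2 ssse3; then
        level=v2
        if has_all_flags "$1" avx avx2 bmi1 bmi2 f16c fma abm movbe; then
            level=v3
            if has_all_flags "$1" avx512f avx512bw avx512cd avx512dq avx512vl; then
                level=v4
            fi
        fi
    fi
    echo "$level"
}

# Report the level of the current host, if /proc/cpuinfo is readable.
if [ -r /proc/cpuinfo ]; then
    host_flags=$(sed -n 's/^flags[[:space:]]*:[[:space:]]*//p' /proc/cpuinfo | head -n 1)
    echo "Host supports x86-64-$(x86_64_level "$host_flags")"
fi
```

A result of v3, for example, would correspond to the `-xx86-64-v3` form listed above. For builds that only run on the compilation host, `-xHost` remains the simpler choice.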

oneAPI for NVIDIA® GPUs, v. 2024.0.1 (click on the Download icon at the top of the page).

Prerequisite: Install CUDA 12.2.

bash ./install-cuda 12.2

Install oneAPI DPC++ CUDA® plugin.

bash ./oneapi-for-nvidia-gpus-2024.0.1-cuda-12.0-linux.sh

There is also oneAPI for AMD GPUs, a plugin for targeting AMD hardware.

oneAPI Monte Carlo Pi Demonstration

Build a binary targeting both the CPU (spir64) and the GPU (nvptx64-nvidia-cuda).

unset CFLAGS

unset CXXFLAGS

unset FCFLAGS

unset FFLAGS

. ~/intel/oneapi/setvars.sh --include-intel-llvm

icpx -fsycl -fsycl-targets=spir64,nvptx64-nvidia-cuda -O2 -g -DNDEBUG monte_carlo_pi.cpp -o monte_carlo_pi -lOpenCL -lsycl

By default, the binary runs on the GPU if both CPU and GPU targets are built.

./monte_carlo_pi

ONEAPI_DEVICE_SELECTOR="ext_oneapi_cuda:*" ./monte_carlo_pi

ONEAPI_DEVICE_SELECTOR="opencl:cpu" ./monte_carlo_pi
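To compare devices side by side, the same binary can be run once per selector in a loop. A small sketch; `run_on_selectors` is a hypothetical helper, and the selector strings are the ones used above.

```shell
# Run a SYCL binary once per device selector and label each run.
# $1 is the binary; remaining arguments are ONEAPI_DEVICE_SELECTOR values.
run_on_selectors() {
    binary=$1
    shift
    for selector in "$@"; do
        echo "== ONEAPI_DEVICE_SELECTOR=$selector =="
        # The variable is set only for this one invocation.
        ONEAPI_DEVICE_SELECTOR="$selector" "$binary" || echo "run failed for $selector" >&2
    done
}
```

For the demo above, this would be called as `run_on_selectors ./monte_carlo_pi "ext_oneapi_cuda:*" "opencl:cpu"`. A failing run is reported on stderr and the loop continues with the next selector.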

Some success after all.

ONEAPI_DEVICE_SELECTOR="ext_oneapi_cuda:*" ./monte_carlo_pi

Calculating estimated value of pi...

Running on NVIDIA GeForce RTX 3070

The estimated value of pi (N = 10000) is: 3.1388

Computation complete. The processing time was 0.0536164 seconds.

The simulation plot graph has been written to 'MonteCarloPi.bmp'